Publicly exposed resources are a leading cause of breaches in AWS. Security researchers periodically deep-dive into an AWS service to gauge the extent of public exposure.

I previously explored Publicly Exposed AWS Document DB Snapshots. If you haven’t seen it, check out that piece for a history of deep dives into publicly exposed AWS resources.

Today, I’ll be looking at Amazon SSM Command Documents. Read on for details on the research, including yet more evidence that Access Keys will leak anywhere there are public resources available.

SSM Command Documents

AWS Systems Manager Run Command lets you define a Command document that bundles a command, and use IAM to manage who can run it against your instances. Run Command is super handy; I talk about it a bit in “The Path to Zero Touch Production” as a way to implement safe Script Running.

AWS provides over a hundred documents, but you can also create your own custom documents. For example, you might use a Command document to download and apply a security baseline to new instances, or to distribute a specific patch.

Publicly Exposed SSM Command Documents

SSM allows sharing Command documents publicly. Documents are regional and can be shared either with between 1 and 1,000 specific accounts, or publicly.

✨ AWS documentation advises against including secrets in shared documents, and points users to the (off by default) block public sharing setting at the account level.
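To audit your own account, here is a minimal sketch, assuming you pass in a boto3 SSM client for one region. It lists the documents you own, flags any whose "Share" permission includes the special account ID "all" (how SSM represents public sharing), and can enable the account-level block. The helper names are hypothetical; the API calls (list_documents, describe_document_permission, update_service_setting) and the setting ID come from the SSM API.

```python
# Sketch: audit self-owned SSM documents for public sharing.
# Assumes `ssm` is a boto3 SSM client, e.g. boto3.client("ssm", region_name="us-east-1").

PUBLIC_SHARING_SETTING = "/ssm/documents/console/public-sharing-permission"


def is_public(account_ids):
    """SSM represents public sharing as the special account ID 'all'."""
    return any(account_id.lower() == "all" for account_id in account_ids)


def shared_account_ids(ssm, document_name):
    """Collect every account ID a document is shared with, following NextToken."""
    account_ids, kwargs = [], {"Name": document_name, "PermissionType": "Share"}
    while True:
        response = ssm.describe_document_permission(**kwargs)
        account_ids.extend(response.get("AccountIds", []))
        if not response.get("NextToken"):
            return account_ids
        kwargs["NextToken"] = response["NextToken"]


def find_public_documents(ssm):
    """Return the names of self-owned documents that are shared publicly."""
    paginator = ssm.get_paginator("list_documents")
    public = []
    for page in paginator.paginate(Filters=[{"Key": "Owner", "Values": ["Self"]}]):
        for doc in page["DocumentIdentifiers"]:
            if is_public(shared_account_ids(ssm, doc["Name"])):
                public.append(doc["Name"])
    return public


def block_public_sharing(ssm):
    """Turn on the (off by default) block public sharing setting for this client's region."""
    ssm.update_service_setting(SettingId=PUBLIC_SHARING_SETTING, SettingValue="Disable")
```

Because both documents and the setting are regional, you would run this once per region with a client for each.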

Gathering data

You can enumerate exposed documents with a basic CLI command, but it only works per region. The following janky Python was used to get global coverage:

```python
import json

import boto3


def get_regions():
    # By default, describe_regions() returns only the regions enabled for this account
    client = boto3.client('ec2')
    return [region['RegionName'] for region in client.describe_regions()['Regions']]


def enumerate_ssm_documents(region, regional_client):
    # List every public Command document visible from this region
    paginator = regional_client.get_paginator('list_documents')
    page_iterator = paginator.paginate(
        Filters=[
            {'Key': 'Owner', 'Values': ['Public']},
            {'Key': 'DocumentType', 'Values': ['Command']},
        ],
        MaxResults=50,
    )

    total_results = 0
    document_identifiers = []
    for page in page_iterator:
        total_results += len(page['DocumentIdentifiers'])
        document_identifiers.extend(page['DocumentIdentifiers'])

    # Drop the documents Amazon publishes itself
    filtered_document_identifiers = [doc for doc in document_identifiers if doc['Owner'] != 'Amazon']
    filtered_results = len(filtered_document_identifiers)
    return total_results, filtered_results, filtered_document_identifiers


def global_enumerate_ssm_documents():
    regions = get_regions()
    results_metrics = {}
    global_filtered_document_identifiers = []
    for region in regions:
        client = boto3.client('ssm', region_name=region)
        total_results, filtered_results, filtered_document_identifiers = enumerate_ssm_documents(region, client)
        results_metrics[region] = {
            "total": total_results,
            "filtered": filtered_results,
        }
        global_filtered_document_identifiers.extend(filtered_document_identifiers)
    return global_filtered_document_identifiers, results_metrics


filtered_document_identifiers, results_metrics = global_enumerate_ssm_documents()
```

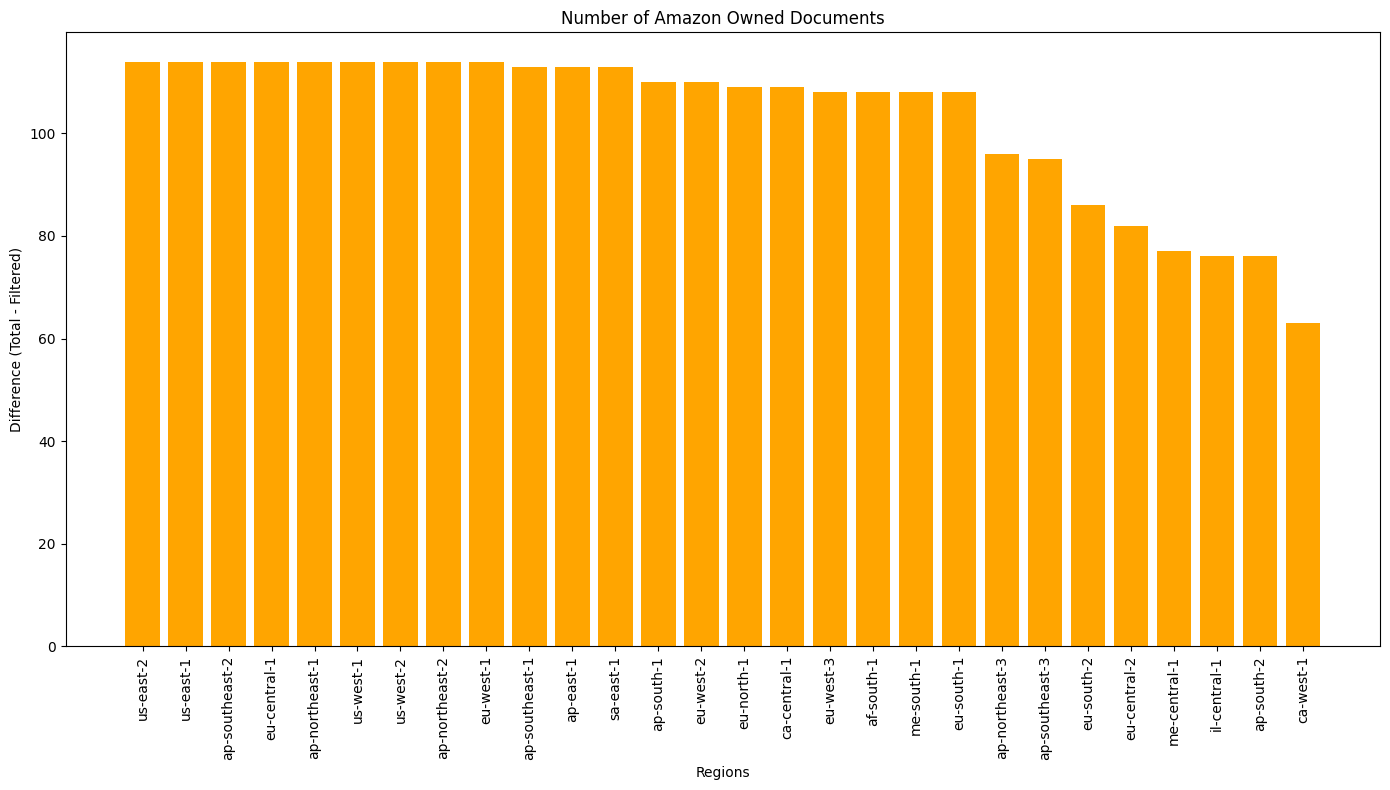

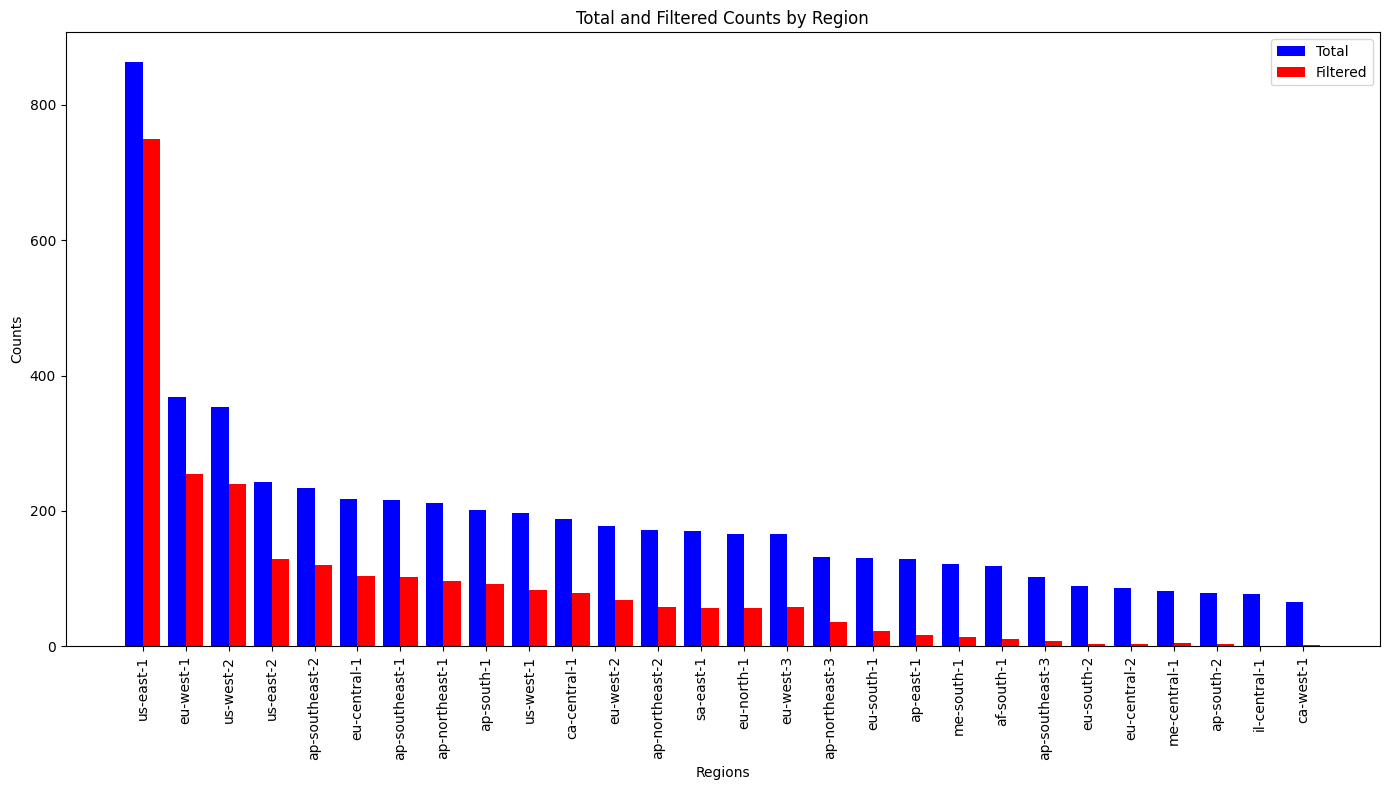

Across 28 enabled regions, there were a total of 2,472 public Command documents.

The following graph shows the total number of public documents per-region, and the “filtered” value, which removes Amazon-owned documents.

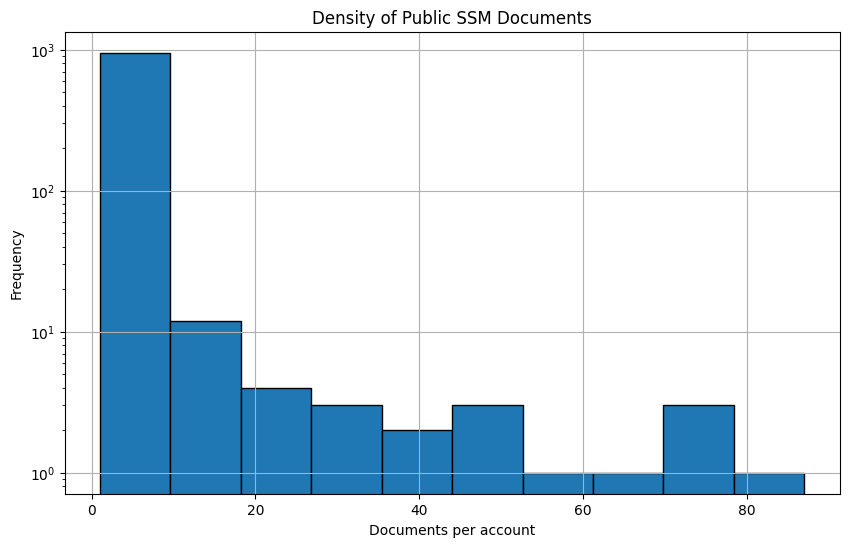

Almost 1,000 (982, to be exact) accounts shared one or more documents publicly.
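The headline numbers can be derived from the values the script returns. A minimal sketch, assuming the results_metrics dict and filtered_document_identifiers list produced by global_enumerate_ssm_documents(); summarize() is a hypothetical helper. It relies on the Owner field of a document shared by another account holding that account's ID, so counting distinct owners gives the number of sharing accounts.

```python
def summarize(results_metrics, filtered_document_identifiers):
    """Aggregate per-region counts and count distinct non-Amazon owners."""
    total = sum(m["total"] for m in results_metrics.values())
    filtered = sum(m["filtered"] for m in results_metrics.values())
    # Each non-Amazon public document's Owner is the sharing account's ID
    owners = {doc["Owner"] for doc in filtered_document_identifiers}
    # Regions ordered by number of non-Amazon public documents, largest first
    by_region = sorted(results_metrics.items(), key=lambda kv: kv[1]["filtered"], reverse=True)
    return {
        "regions": len(results_metrics),
        "total": total,
        "filtered": filtered,
        "accounts": len(owners),
        "top_regions": [region for region, _ in by_region[:5]],
    }
```

Usage: print(summarize(results_metrics, filtered_document_identifiers)).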

Gathering contents

Each document contains one or more “steps” with an array of commands to be run sequentially.

The following code was used to:

- Download the enumerated documents

- Extract the array of commands from each step

- Turn the array into a newline-delimited file, stored locally and named after {region}_{account_id}_{document_name}_{step_name}

```python
import json
import os

import boto3


def retrieve_and_save_ssm_documents(arns):
    os.makedirs('extracted-documents', exist_ok=True)
    for arn in arns:
        # Parse the ARN (arn:aws:ssm:{region}:{account_id}:document/{name})
        parts = arn.split(':')
        region = parts[3]
        account_id = parts[4]
        document_name = parts[5].split('/')[-1]

        # Create a boto3 client for the document's region
        ssm_client = boto3.client('ssm', region_name=region)

        try:
            # Retrieve the document content (JSON is the default format)
            response = ssm_client.get_document(Name=arn)
            document_content = json.loads(response['Content'])

            # Extract and save each mainSteps runCommand (schema 2.x;
            # schema 1.2 documents use runtimeConfig instead and are skipped)
            for step in document_content.get('mainSteps', []):
                step_name = step['name']
                run_command = step.get('inputs', {}).get('runCommand', [])
                # runCommand may be a single string such as "{{ commands }}";
                # joining a bare string would split it into characters
                if isinstance(run_command, str):
                    run_command = [run_command]

                file_name = f"extracted-documents/{region}_{account_id}_{document_name}_{step_name}.sh"  # Assuming shell script files
                with open(file_name, 'w') as file:
                    file.write('\n'.join(run_command))
                print(f"Step {step_name} from document {document_name} saved to {file_name}")

        except ssm_client.exceptions.InvalidDocument as e:
            print(f"Document {document_name} not found: {e}")
        except Exception as e:
            print(f"An error occurred: {e}")


# Assumes the Name of each shared document is its full ARN, as parsed above
names_of_filtered_document_identifiers = [dic['Name'] for dic in filtered_document_identifiers]
retrieve_and_save_ssm_documents(names_of_filtered_document_identifiers)
```

A total of 3,743 scripts were extracted from the 2,472 documents, with a total size of 19.9MB. Individual scripts ranged in size from 0 to 37KB.
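Figures like these can be tallied directly from the output directory. A minimal sketch, assuming the extracted-documents layout and .sh suffix used above; script_stats() is a hypothetical helper. It sums st_size (bytes of content), so totals may differ slightly from what a file manager reports as disk usage.

```python
from pathlib import Path


def script_stats(directory="extracted-documents"):
    """Count extracted scripts and report total, smallest, and largest sizes in bytes."""
    sizes = [path.stat().st_size for path in Path(directory).glob("*.sh") if path.is_file()]
    if not sizes:
        return {"count": 0, "total_bytes": 0, "min_bytes": 0, "max_bytes": 0}
    return {
        "count": len(sizes),
        "total_bytes": sum(sizes),
        "min_bytes": min(sizes),
        "max_bytes": max(sizes),
    }
```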

Analyzing contents

Having captured all the documents locally, I wanted to try a couple of things.

Trufflehog

Trufflehog is a convenient, open-source secret scanning tool.

By running `trufflehog filesystem --directory=extracted-documents`, I was quickly able to check all the command documents for over 700 types of credentials.

There was a single affected document, containing two verified secrets:

```
🐷🔑🐷 TruffleHog. Unearth your secrets. 🐷🔑🐷

2024-06-24T17:13:09-04:00 info-0 trufflehog --directory flag is deprecated, please pass directories as arguments
2024-06-24T17:13:09-04:00 info-0 trufflehog running source {"source_manager_worker_id": "IJYxp", "with_units": true}

✅ Found verified result 🐷🔑
Detector Type: SlackWebhook
Decoder Type: PLAIN
Raw result: {REDACTED}
Rotation_guide: https://howtorotate.com/docs/tutorials/slack-webhook/
File: extracted-documents/ap-northeast-1_291705159709_AutoScalingLogUpdate_configureServer.sh
Line: 6

✅ Found verified result 🐷🔑
Detector Type: AWS
Decoder Type: PLAIN
Raw result: {REDACTED}
Resource_type: Access key
Account: 291705159709
Rotation_guide: https://howtorotate.com/docs/tutorials/aws/
User_id: AIDAUH2X3QAOYSPNS3IOH
Arn: arn:aws:iam::291705159709:user/{REDACTED}
File: extracted-documents/ap-northeast-1_291705159709_AutoScalingLogUpdate_configureServer.sh
Line: 7

2024-06-24T17:13:10-04:00 info-0 trufflehog finished scanning {"chunks": 3933, "bytes": 9550827, "verified_secrets": 2, "unverified_secrets": 0, "scan_duration": "596.019084ms", "trufflehog_version": "3.78.2"}
```

The leaked IAM user appeared to have relatively restrictive permissions. Metadata suggests it belonged to a publicly traded media company in APAC. I uploaded the credentials to GitHub to ensure AWS quarantined them; they were disabled within 24 hours of the quarantine. As of 06/26/2024 at noon ET, the document has yet to be removed.

✨ Pro Tip: Riding on top of GitHub secret scanning like this is the only good way to get credentials revoked if you don’t know the owner or don’t have a contact route. I was inspired by automated work done against PyPI.
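For a quick first pass without TruffleHog, a simple regex can flag candidate AWS access key IDs. This is a swapped-in, much cruder technique: a minimal sketch, assuming the extracted-documents layout above, that matches only the well-known AKIA (long-term) and ASIA (temporary) key ID prefixes. Unlike TruffleHog, it does not find secret access keys and does not verify anything against AWS. find_access_key_ids() is a hypothetical helper.

```python
import re
from pathlib import Path

# Access key IDs: "AKIA" (long-term) or "ASIA" (temporary) plus 16 uppercase letters/digits
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_key_ids(directory="extracted-documents"):
    """Yield (file name, line number, key ID) for every candidate access key ID."""
    for path in sorted(Path(directory).glob("*.sh")):
        text = path.read_text(errors="replace")
        for line_no, line in enumerate(text.splitlines(), start=1):
            for match in ACCESS_KEY_ID.finditer(line):
                yield path.name, line_no, match.group()
```

Any hit is only a lead; confirming it is live still requires a verifier such as TruffleHog.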

Public S3 buckets

I noticed that some documents reference S3 buckets, generally to pull down additional artifacts or code, and I was able to extract dozens of unique bucket names. None of the publicly readable artifacts or publicly listable buckets among them were particularly sensitive.

Publicly readable artifacts included configuration files, additional scripts, and mirrors of public binaries for third-party software. One publicly readable script contained a hard-coded API key, but its usage doesn’t seem particularly risky.
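Pulling bucket names out of the extracted scripts can be sketched with a regex over s3:// URIs. This is a minimal sketch, assuming the extracted-documents layout above; extract_buckets() is a hypothetical helper. It only covers s3:// URIs (virtual-hosted URLs like https://bucket.s3.amazonaws.com or ARNs would need extra patterns) and does not check whether a bucket exists or is public.

```python
import re
from pathlib import Path

# s3://bucket/... URIs. Bucket names are 3-63 characters of lowercase letters,
# digits, dots, and hyphens, beginning and ending with a letter or digit.
S3_URI = re.compile(r"s3://([a-z0-9][a-z0-9.-]{1,61}[a-z0-9])")


def extract_buckets(directory="extracted-documents"):
    """Return the sorted set of bucket names referenced via s3:// URIs."""
    buckets = set()
    for path in Path(directory).glob("*.sh"):
        buckets.update(S3_URI.findall(path.read_text(errors="replace")))
    return sorted(buckets)
```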

Interesting Commands

Public commands offer an interesting glimpse inside cloud security programs.

Some of the more interesting commands included:

- A log4jFileSystemQuery command that stored results in s3://cerner-ssm-log4jscan

- A Linux memory acquisition command that used AVML (and s3://pinterest-evidence-locker)

- An attempt to configure CrowdStrike exclusions using an unclaimed, typo'd bucket. I’ve since reserved the bucket for safety.

Takeaways

- AWS should offer security by default, and invert their current block public sharing setting to be enabled by default. This would match improvements made elsewhere.

- AWS should offer a path to report issues to customers regarding vulnerabilities in their AWS environments. At the very least, I recommend they implement a way to securely report leaked credentials, such as an `aws sts report-leaked-credential` API call. The current unwillingness to Invent and Simplify here demonstrates a lack of the Customer Obsession we hope to see from them.

- AWS should strongly consider performing basic secret scanning for AWS credentials in all classes of publicly exposed resources. The fact that this is so viable for an external party to do should demonstrate the opportunity they have to embrace Frugality with a small improvement that is more commensurate with their Broad Responsibility.

- There are two axioms of AWS resources: 1) if a resource can be exposed publicly, someone will do so unintentionally, and 2) if a resource can have secrets shoved in it, someone will do so. These pair poorly.